Author's posts

Jun 26 2013

Posting abroad: my book reviews at ScraperWiki

It’s been a bit quiet on my blog this year, partly because I’ve got a new job at ScraperWiki. This has reduced my blogging for two reasons: the first is that I am now much busier, and the second is that I write for the ScraperWiki blog. I thought I’d summarise here what I’ve done there, just to keep everything in one place.

There’s a lot of programming and data science in my new job, so I’ve been reading programming and data analysis books on the train into work. The book reviews are linked below:

- R in Action by Robert I. Kabacoff;

- Data Visualization: A Successful Design Process by Andy Kirk;

- Machine Learning in Action by Peter Harrington;

- JavaScript: The Good Parts by Douglas Crockford;

- Interactive Data Visualization for the Web by Scott Murray;

- Natural Language Processing with Python by Steven Bird, Ewan Klein & Edward Loper.

I seem to have read quite a lot!

Related to this is a post I wrote on Enterprise Data Analysis and Visualization: An Interview Study, an academic paper published by the Stanford Visualization Group.

Finally, I’ve been on the stage, or at least presenting at a meeting: I spoke at Data Science London a couple of weeks ago about scraping and parsing PDF files. I wrote a short summary of the event here.

Jun 25 2013

Book review: Natural Language Processing with Python by Steven Bird, Ewan Klein & Edward Loper

This post was first published at ScraperWiki.

I bought Natural Language Processing with Python by Steven Bird, Ewan Klein & Edward Loper for a couple of reasons. Firstly, ScraperWiki are part of the EU Newsreader Project, which seeks to make a “history recorder” using natural language processing to convert large streams of news articles into a more structured form. ScraperWiki’s role in this project is to scrape open sources of news-related material, such as parliamentary records, and to drive exploitation of the results of this work, both commercially and through our contacts in the open source community. Although we’re not directly involved in the natural language processing work, it seems useful to get a better understanding of the area.

Secondly, I recently gave a talk at Data Science London, and my original interpretation of the brief was that I should talk a bit about natural language processing. I know little of this subject, so I thought I should read up on it; as it turned out, no natural language processing was required on my part.

This is the book of the Natural Language Toolkit (NLTK), a Python library which contains a wide range of linguistic resources, methods for processing those resources, methods for accessing new resources and small applications that give a user-friendly interface to various features. In this context “resources” means the full text of various books, corpora (large collections of text which have been marked up to varying degrees with grammatical and other data) and lexicons (dictionaries and the like).

Natural Language Processing is didactic: it is intended as a text for undergraduates, with extensive exercises at the end of each chapter. As well as teaching the fundamentals of natural language processing, it also seeks to teach readers Python. I found this second theme quite useful; I’ve been programming in Python for quite some time, but my default style is FORTRANIC. The authors are a little scornful of this approach: they present some code I would have been entirely happy to write and describe it as little better than machine code! Their presentation of Python starts with list comprehensions, which is unconventional, but goes on to cover the language more widely.
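To make the contrast concrete, here is a small sketch of my own (not code from the book) that filters long words from a made-up list in two ways: first with an explicit index loop and accumulator, in the FORTRANIC style, and then with the list comprehension style the book favours. The function names and sample words are illustrative assumptions.

```python
# A made-up word list, purely for illustration.
words = ["language", "is", "a", "remarkably", "ambiguous", "medium"]


def long_words_loop(tokens, min_len):
    """FORTRANIC style: explicit index loop and an accumulator list."""
    result = []
    for i in range(len(tokens)):
        if len(tokens[i]) > min_len:
            result.append(tokens[i])
    return result


def long_words_comprehension(tokens, min_len):
    """Idiomatic style: the filter is stated directly as a list comprehension."""
    return [w for w in tokens if len(w) > min_len]


print(long_words_comprehension(words, 7))  # ['language', 'remarkably', 'ambiguous']
```

Both functions return the same list; the comprehension simply says it in one line, which is the style the book encourages from its first chapters.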

The natural language processing side of the book progresses from the smallest language structures (the structure of words), through part-of-speech labelling, phrases and sentences, to ultimately deriving logical statements from natural language.

Perhaps surprisingly, tokenization and segmentation (the processes of dividing text into words and sentences, respectively) are not trivial. For example, acronyms may contain full stops which are not sentence terminators. Less surprisingly, part-of-speech (POS) tagging (labelling words as verbs, nouns, adjectives, etc.) is more complex, since the same word can be a different part of speech in different contexts. Even experts sometimes struggle with part-of-speech labelling. The process of chunking, identifying noun and verb phrases, is of a similar character.
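A minimal sketch of why full stops make sentence segmentation awkward, assuming a hand-written abbreviation list and a made-up sample sentence. NLTK provides the Punkt sentence tokenizer, which learns abbreviations from raw text; the `naive_split` and `segment` functions below are hypothetical stand-ins to show the idea, not NLTK's API.

```python
import re

# Hypothetical abbreviation list; a real system would learn these from data.
ABBREVIATIONS = {"Dr.", "Mr.", "Mrs.", "U.S.", "e.g.", "i.e."}

TEXT = "Dr. Smith visited the U.S. embassy. He left early."


def naive_split(text):
    """Break after every full stop that is followed by whitespace."""
    return re.split(r"(?<=\.)\s+", text)


def segment(text, abbreviations):
    """End a sentence at terminal punctuation unless the token is a known abbreviation."""
    sentences, current = [], []
    for token in text.split():
        current.append(token)
        if token.endswith((".", "!", "?")) and token not in abbreviations:
            sentences.append(" ".join(current))
            current = []
    if current:  # keep any trailing text that has no final punctuation
        sentences.append(" ".join(current))
    return sentences


print(naive_split(TEXT))  # ['Dr.', 'Smith visited the U.S.', 'embassy.', 'He left early.']
print(segment(TEXT, ABBREVIATIONS))  # ['Dr. Smith visited the U.S. embassy.', 'He left early.']
```

The hand-written rule fails the other way when an abbreviation really does end a sentence, which is one reason learned approaches such as Punkt are used in practice.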

Both chunking and part-of-speech labelling are tasks which can be handled by machine learning. The zero-order POS labeller assumes everything is a noun; the next simplest method is a majority-voting one, which takes the POS tag of the previous word(s) and assigns the current word the tag it most frequently has in that context in an already labelled body of text. Beyond this are machine learning algorithms which take feature sets, including the tags of neighbouring words, to provide a best estimate of the tag for the word of interest. These algorithms include Bayesian classifiers, decision trees and the like, as discussed in Machine Learning in Action, which I have previously reviewed. Natural Language Processing covers these topics fairly briefly but provides pointers to take things further, in particular highlighting that, for performance reasons, one may call external machine learning libraries from within the Natural Language Toolkit.
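The two simplest taggers described above can be sketched in plain Python. This is a hedged illustration rather than NLTK's implementation (NLTK has its own `DefaultTagger`, `UnigramTagger` and `BigramTagger` classes): the three-sentence training corpus is invented, the tags loosely follow Penn Treebank conventions, and the context tagger backs off to a word's most frequent tag overall, then to the noun default.

```python
from collections import Counter, defaultdict

# A tiny, invented training corpus of (word, tag) pairs; purely illustrative.
TRAIN = [
    [("the", "DT"), ("dog", "NN"), ("can", "MD"), ("run", "VB")],
    [("a", "DT"), ("can", "NN"), ("of", "IN"), ("soup", "NN")],
    [("they", "PRP"), ("can", "MD"), ("swim", "VB")],
]


def default_tag(words, label="NN"):
    """Zero-order tagger: label every word as a noun."""
    return [(w, label) for w in words]


def train(sentences):
    """Count tags per word, and per (previous tag, word) context."""
    by_word = defaultdict(Counter)
    by_context = defaultdict(Counter)
    for sentence in sentences:
        prev = "<s>"  # marker for the start of a sentence
        for word, pos in sentence:
            by_word[word][pos] += 1
            by_context[(prev, word)][pos] += 1
            prev = pos
    return by_word, by_context


def context_tag(words, by_word, by_context, default="NN"):
    """Majority vote on (previous tag, word); back off to the word alone, then the default."""
    tagged, prev = [], "<s>"
    for word in words:
        if (prev, word) in by_context:
            best = by_context[(prev, word)].most_common(1)[0][0]
        elif word in by_word:
            best = by_word[word].most_common(1)[0][0]
        else:
            best = default
        tagged.append((word, best))
        prev = best
    return tagged


by_word, by_context = train(TRAIN)
print(context_tag(["a", "can", "run"], by_word, by_context))
print(context_tag(["they", "can", "fish"], by_word, by_context))
```

The context is what lets "can" come out as a noun after "a" but as a modal verb after "they"; the unseen word "fish" falls all the way back to the noun default.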

The final few chapters, on context-free grammars, exceeded the limits of my understanding for casual reading, although the toy example of using grammars to translate natural language queries into SQL clarified the intention of these grammars for me. The book also provides pointers to additional material, and to where the limits of the field of natural language processing lie.
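To give a flavour of the idea, here is a hedged toy of my own rather than the book's example: a made-up three-rule grammar, parsed by a hand-written recursive-descent parser in which each rule returns a fragment of SQL. The grammar, table names and column names are all hypothetical; NLTK handles this far more generally with feature-based grammars.

```python
# A made-up toy grammar, with each rule returning a fragment of SQL:
#   QUERY -> "what" NOUN PRED
#   NOUN  -> "cities" | "countries"
#   PRED  -> "are" "in" NAME | "have" "population" "above" NUMBER

# Hypothetical (column, table) pairs for each noun.
NOUNS = {
    "cities": ("city", "city_table"),
    "countries": ("country", "country_table"),
}


class Parser:
    """Recursive-descent parser: one method per grammar rule."""

    def __init__(self, sentence):
        # Lower-case, drop a trailing question mark and split into tokens.
        self.tokens = sentence.lower().rstrip("?").split()
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def expect(self, word=None):
        """Consume the next token, insisting on `word` if one is given."""
        token = self.peek()
        if token is None or (word is not None and token != word):
            raise ValueError(f"unexpected token {token!r} at position {self.pos}")
        self.pos += 1
        return token

    def query(self):
        self.expect("what")
        column, table = self.noun()
        condition = self.pred()
        if self.peek() is not None:
            raise ValueError(f"trailing input at position {self.pos}")
        return f"SELECT {column} FROM {table} WHERE {condition}"

    def noun(self):
        token = self.expect()
        if token not in NOUNS:
            raise ValueError(f"unknown noun {token!r}")
        return NOUNS[token]

    def pred(self):
        if self.peek() == "are":
            self.expect("are")
            self.expect("in")
            # Toy only: real code should pass values as query parameters.
            return f"location = '{self.expect()}'"
        self.expect("have")
        self.expect("population")
        self.expect("above")
        number = self.expect()
        if not number.isdigit():
            raise ValueError(f"expected a number, got {number!r}")
        return f"population > {number}"


print(Parser("What cities are in China?").query())
print(Parser("What countries have population above 1000000").query())
```

Sentences outside the grammar, such as "what cities are near china", raise a `ValueError` instead of producing SQL, which is the point of having a grammar at all.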

I enjoyed this book and recommend it: it’s well written, in a style with just the right level of formality. I read it on the train so didn’t try out as many of the code examples as I would have liked; more of this in future. You don’t have to buy this book, since it is available online in its entirety, but I think it is well worth the money.

May 25 2013

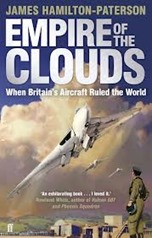

Book review: Empire of the Clouds by James Hamilton-Paterson

Empire of the Clouds by James Hamilton-Paterson, subtitled When Britain’s Aircraft Ruled the World, is the story of the British aircraft industry in the 20 years or so following the Second World War. I read it following a TV series a while back (name now forgotten) and the recommendation of a friend. I thought it might fit with the story of computing during a similar period, which I had gleaned from A Computer Called LEO. The obvious similarity is that at the end of the Second World War Britain held a strong position in both aircraft and computer design, which spawned a large number of manufacturers who had all but vanished by the end of the 1960s.

The book starts with the 1952 Farnborough Air Show crash, in which 29 spectators and the aircraft’s two crew were killed when a prototype de Havilland 110 broke up in mid-air, with one of its engines crashing into the crowd. Striking to modern eyes is the attitude to this disaster: the show went on directly, with the next pilot up advised to “…keep to the right side of the runway and avoid the wreckage”, all this whilst ambulances were still converging to collect the dead and wounded. This attitude applied equally to the lives of test pilots, many of whom were to die in the years after the war. Presumably this was related to wartime experiences, where pilots might routinely expect to lose a large fraction of their colleagues in combat, and where city-dwellers had recent memories of nightly death tolls in the hundreds from aerial bombing.

Some test pilots died as they pushed their aircraft towards the sound barrier. The aerodynamics of an aircraft change dramatically as it approaches the speed of sound, making it difficult to control, and all this happens at very high speed, so even where solutions to problems existed they were difficult to find in the limited time available. Black box technology for recording what had happened was rudimentary, so the general approach was to creep up on the speeds at which others had come to grief, in the hope of finding out what had gone wrong by direct experience.

At the end of the Second World War Britain had a good position technically in the design of aircraft, and a head start with the jet engine. There were numerous manufacturers across the country who had been churning out aircraft to support the war effort. This could not be sustained in peacetime, but the required rationalisation did not occur for quite some time. Another consequence of the war was that, for resilience to aerial bombing, manufacturers frequently had distributed facilities which were highly inconvenient in peacetime; these arrangements appear to have remained in place for some time after the war.

In some ways the subtitle “When Britain’s Aircraft Ruled the World” is overly optimistic: many exciting and intriguing prototype aircraft were produced, but only a few of them made it to production, and even fewer were commercially or militarily successful. Exceptions to this general rule were the English Electric Canberra jet bomber, the English Electric Lightning, the Avro Vulcan and the Harrier jump jet.

The longevity of these aircraft in service was incredible: the Vulcan and Canberra were introduced in the 1950s, with the Vulcan retiring in 1984 and the Canberra lasting until 2006. The Harrier jump jet entered service in 1969 and is still operational. The Lightning entered service in 1959 and was retired in 1988; viewers of the recent Wonders of the Solar System will have seen Brian Cox take a trip in a Lightning based at Thunder City, where thrill-seekers can pay to fly in the privately owned aircraft. They’re ridiculously powerful but only have 40 minutes or so of fuel, unless refuelled in flight.

Hamilton-Paterson’s diagnosis is that the government’s post-war procurement policies, which frequently had multiple manufacturers designing prototypes for the same brief and then frequently cancelled those orders, were partly to blame for the failure of the industry. These cancellations were brutal: not only were the prototypes destroyed, but so were the engineering tools used to make them. This is somewhat reminiscent of the decommissioning of the Colossus computer at the end of the Second World War. In addition, the strategic view at the end of the war was that there would be no further wars to fight for the next ten years, and development of fighter aircraft was therefore slowed. Military procurement has hardly progressed to this day: as a youth I remember the long, drawn-out birth of the Nimrod reconnaissance aircraft, and more recently there have been misadventures with the commissioning of Chinook helicopters and new aircraft carriers.

A second strand to the industry’s failure was the management and engineering approaches common at the time in Britain. Management stopped for two hours for sumptuous lunches every day, and was often autocratic. Whilst American and French engineers were responsive to the demands of their potential customers and their test pilots, British engineers seemed to find such demands a frightful imposition, which they ignored. Finally, with respect to civilian aircraft, the state-owned British Overseas Airways Corporation was not particularly patriotic in its procurement strategy.

Hamilton-Paterson’s book is personal: he was an eager plane-spotter as a child and says quite frankly that the test pilot Bill Waterton, a central character in the book, was a hero to him. This view may or may not colour the conclusions he draws about the period, but it certainly makes for a good read. The book could have been a barrage of detail about each and every aircraft, but the more personal reflections and memories make it something different and more readable. There are parallels with the computing industry after the war, but perhaps the most telling lesson is that flashes of engineering brilliance are of little use if they are not matched by a consistent engineering approach and the management to go with it.

May 19 2013

Three years of electronic books

It is customary to write reviews of things when they are fresh and new. This blog post is a little different in the sense that it is a review of 3 years of electronic book usage.

My entry to e-books was with the Kindle: a beautiful, crisp display, fantastic battery life but with a user interface which lagged behind smartphones of the time. More recently I have bought a Nexus 7 tablet on which I use the Kindle app, and very occasionally use my phone to read.

Primarily my reading on the Kindle has been fiction, with a little modern politics and the odd book on technology. I have tried non-fiction a couple of times but have been disappointed (the illustrations come out poorly). Fiction works well because there are just words: you start reading at the beginning of the book and carry on to the end in a linear fashion. The only real issue I’ve had is that sometimes, with multiple devices and careless clicking, it’s possible to lose your place; I found this more of a problem than with a physical book. I bookmark my physical books with rail tickets. Very occasionally they fall out, but then I have a rough memory of where I was in the book via the depth axis, and flicking rapidly through a book (at pages per second) is easy: glimpses of chapter starts and the layout of paragraphs are enough to let you know where you are.

There are other times when the lack of a physical presence is galling. My house is full of books; many have migrated to the loft on the arrival of Thomas, my now-toddling son, but many still remain, visible to visitors. Slightly shamefaced, I admit to a certain pretension in my retention policy: Ulysses found shelf space for many years whilst science fiction and fantasy made a rapid exit. Non-fiction is generally kept. Books tell you of a person’s interests, and form an ad hoc lending library. In the same way as a beaver’s dam is part of its extended phenotype, my books are part of mine. With ebooks we largely lose this display function; I can publish my reading on services like Shelfari, but this is not the same as books on shelves. The same applies to train reading: with physical books, passengers can see what the others are reading.

Another missing aspect is physical size. I’ve read Reamde by Neal Stephenson, a book of a thousand pages, and JavaScript: The Good Parts by Douglas Crockford, only a hundred and fifty or so. The Kindle was the same size for both books! Really it needs some sort of bladder which inflates to match the number of pages in the book, perhaps deflating as you make your way through it.

Regular readers of this blog will know I blog what I read, at least for non-fiction. My scheme for this is to read, taking notes in Evernote. This doesn’t work so well on either the Kindle or the Kindle app: there is too much switching between apps. “But the Kindle has notes and highlighting!” I hear you say. Yes, it does, but it would appear that digital rights management (DRM) has reduced its functionality: I can’t share my notes easily and, if a book is stored as a personal document because it didn’t come from the Kindle store, then you can’t even share notes across devices. I suspect this is a DRM issue because, without limits, you could simply highlight a whole book, or perhaps copy and paste it. And obviously I can’t lend my ebooks in the same way as I lend my physical books, or even donate them to charity when I’m finished with them.

This isn’t to say ebooks aren’t really useful: I can take plenty of books on holiday without filling my luggage, and I can get them at the last minute. I have a morbid fear of Running Out of Things To Read, which my e-reader assuages. In my experience, technology books at the cheaper, lower-volume end of the market are also better electronically, since they come in colour whilst their physical counterparts do not (and the ones I’ve read are relatively unencumbered by DRM).

Overall verdict: you can pack a lot of fiction onto an e-reader, but I’ve been using physical books for 40 years, humans have been using them for thousands of years, and it shows!

May 09 2013

Book review: Interactive Data Visualization for the Web by Scott Murray

This post was first published at ScraperWiki.

Next in my book reading, I turn to Interactive Data Visualization for the Web by Scott Murray (@alignedleft on Twitter). This book covers the d3 JavaScript library for data visualisation, written by Mike Bostock, who was also responsible for the Protovis library. If you’d like a taster of the book’s content, a number of the examples can also be found on the author’s website.

The book is largely aimed at web designers who are looking to include interactive data visualisations in their work. It includes some introductory material on JavaScript, HTML, and CSS, so has some value for programmers moving into web visualisation. I quite liked the repetition of this relatively basic material, and the conceptual introduction to the d3 library.

I found the book rather slow: on page 197, approaching the final fifth of the book, we were still making a bar chart, with a smaller effort expended in that period on scatter graphs. As a data scientist, I would expect to see several dozen plot types in that number of pages! This is something Scott warns us about, though: d3 is a visualisation framework built for explanatory presentation (i.e. you know the story you want to tell) rather than an exploratory tool (i.e. you want to find out about your data). To be clear, this “slowness” is not a fault of the book, rather a mismatch between the book and my expectations.

From a technical point of view, d3 works by binding data to elements in the DOM for a webpage. It’s possible to do this for any element type, but practically speaking only Scalable Vector Graphics (SVG) elements make real sense. This restriction means that d3 will only work in more recent browsers, which may be a problem for those trapped in some corporate environments. The library contains a lot of helper functions for generating scales, loading data, selecting and modifying elements, animation and so forth. d3 is a low-level library; there is no PlotBarChart function.
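A sketch of the bookkeeping behind binding data to elements, written in Python rather than JavaScript to match the other examples here. In d3 itself this is done with `selection.data()` (optionally given a key function) and its `enter()` and `exit()` selections; the `Bar` class and the `data_join` and `render` functions below are hypothetical names that only mimic the idea, matching data to elements by key and splitting the result into update, enter and exit groups.

```python
from dataclasses import dataclass


@dataclass
class Bar:
    """Stand-in for an SVG <rect>: `key` identifies it, `value` is its bound datum."""
    key: str
    value: float


def data_join(bars, data):
    """Match (key, value) pairs to existing bars by key.

    Returns three groups, mirroring d3's concepts:
      update: (bar, new_value) for keys present in both,
      enter:  (key, value) for data with no bar yet,
      exit:   bars whose key no longer appears in the data.
    """
    existing = {bar.key: bar for bar in bars}
    incoming = dict(data)
    update = [(existing[k], v) for k, v in incoming.items() if k in existing]
    enter = [(k, v) for k, v in incoming.items() if k not in existing]
    exit_ = [bar for k, bar in existing.items() if k not in incoming]
    return update, enter, exit_


def render(bars, data):
    """Apply a join: refresh matched bars, create new ones and drop stale ones."""
    update, enter, exit_ = data_join(bars, data)
    for bar, value in update:
        bar.value = value  # d3 would set (or transition) SVG attributes here
    stale = {bar.key for bar in exit_}
    kept = [bar for bar in bars if bar.key not in stale]
    return kept + [Bar(k, v) for k, v in enter]


bars = render([], [("apples", 3), ("pears", 5)])
bars = render(bars, [("pears", 8), ("plums", 2)])
print(bars)  # [Bar(key='pears', value=8), Bar(key='plums', value=2)]
```

On the second render "pears" is updated in place, "plums" enters and "apples" exits; this three-way split is what makes animated transitions between datasets natural in d3.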

Achieving the static effects demonstrated in this book using other tools such as R, Matlab, or Python would be a relatively straightforward task. The animations, transitions and interactivity would be more difficult to do. More widely, the d3 library supports the creation of hierarchical visualisations which I would struggle to create using other tools.

This book is quite a basic introduction; you can get a much better overview of what is possible with d3 by looking at the API documentation and the Gallery. Scott lists quite a few other resources, including a wide range for the d3 library itself, systems built on d3, and alternatives to d3 if it is not the library you are looking for.

I can see myself using d3 in the future, perhaps not for building generic tools but for custom visualisations where the data is known and the aim is to best explain that data. Scott quotes Ben Shneiderman on the structure of such visualisations:

overview first, zoom and filter, then details on demand