This review was first published at Scraperwiki.

Nate Silver first came to my attention during the 2008 presidential election in the US. He correctly predicted the November result in 49 of 50 states, missing only Indiana, where Barack Obama won by just a single percentage point. This is part of a wider career in prediction: aside from a job at KPMG (which he found rather dull), he has been a professional poker player and run a baseball statistics website.

His book The Signal and the Noise: The Art and Science of Prediction looks at prediction in a range of fields: economics, disease, chess, baseball, poker, climate, weather, earthquakes and politics. It is both a review and a manifesto: a manifesto for making better predictions.

The opening chapter is on the catastrophic miscalculation of the default rate for collateralized debt obligations (CDOs), which led in large part to the recent financial crash. The theme for this chapter is risk and uncertainty. In this instance, risk means the predicted default rate for these financial instruments and uncertainty means the uncertainty in those predictions. For CDOs the problem was that the estimates of risk were way off, and there was no recognition of the uncertainty in those risk estimates.

This theme of unrecognised uncertainty returns for the prediction of macroeconomic indicators such as GDP growth and unemployment. Here again forecasts are made, and practitioners in the field know these estimates are subject to considerable uncertainty, but the market for prediction and simple human frailty mean that these uncertainties are ignored. I’ve written elsewhere on both the measurement and prediction of GDP. In brief, both sides of the equation are so fraught with uncertainty that you might as well toss a coin to predict GDP!

The psychology of prediction returns with a discussion of political punditry and the idea of “foxes” and “hedgehogs”. In this context “hedgehogs” are people with one Big Idea who make their predictions based on their Big Idea and are unshiftable from it. In the political arena the “Big Idea” may be ideology, but as a scientist I can see that science can be afflicted in the same way. To a man with a hammer, everything is a nail. “Foxes”, on the other hand, are more eclectic and combine a range of data and ideas to predict; as a result they are more successful.

In some senses, presidential prediction is like catching chickens in a coop. There is quite a bit of data to use, and your fellow political pundits are typically misled by their own prejudices (they’re “hedgehogs”), so all that you, the “fox”, need to do is calculate in a fairly rational manner and you’re there. Silver returns to the idea of diversity in prediction, combining multiple models or data of multiple types, in his manifesto for better prediction.

There are several chapters on scientific prediction, looking at the predictions of earthquakes, the weather, climate and disease. There is not an overarching theme across these chapters. The point about earthquakes is that precise prediction about where, when and how big appears to be impossible. At short range, the weather is predictable but, beyond a few days, seasonal mean predictions and “the same as today” predictions are as good as the best computer simulations.

Other interesting points raised by the weather chapter are the ideas of judging predictions by accuracy, honesty and economic value. Honesty in this sense means “is this the best model I can make at this point?”. The interesting thing about weather forecasts in the US is that the National Weather Service makes the results of simulations available to all. Big national value-adders such as the Weather Channel produce a “wet bias” forecast, which systematically overstates the chance of rain when the true chance is low. This is because customers prefer to be told that there is, say, a 20% chance of rain and have it turn out dry than to be told the actual chance of rain (say 5%) and have it rain. This “wet bias” gives customers what they want.

Finally, there are predictions on games: poker, baseball, basketball and chess. The benefit of these systems is the large amount of data available; the US in particular has a thriving “gaming statistics” culture. The problems are closed, in the sense that there is a set of known rules to each game. And there is ample opportunity for testing predictions against outcomes. This final factor is important in improving prediction.

In technical terms, Silver’s call to arms is for the Bayesian approach to predictions. With this approach, an attempt is made to incorporate prior beliefs into prediction through the use of an estimate of the prior probability and Bayes’ Theorem. Silver exemplifies this with a discussion of prediction in poker. Essentially, poker is about predicting your opponents’ hands and their subsequent actions based on those cards. To be successful, poker players must do this repeatedly and intuitively. However, luck still plays a large part in poker and only the very best players make a profit long term.
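To make the Bayesian idea concrete, here is a minimal sketch of a single application of Bayes’ Theorem to the poker case. It is my own illustration rather than anything taken from the book: the function name `bayes_update` and all three probabilities are invented for the example. The prior is how likely you think it is that an opponent holds a strong hand before they act, and the posterior is that belief revised after you see them raise.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Return P(hypothesis | evidence) using Bayes' Theorem.

    prior               -- P(hypothesis) before seeing the evidence
    p_evidence_if_true  -- P(evidence | hypothesis is true)
    p_evidence_if_false -- P(evidence | hypothesis is false)
    """
    numerator = p_evidence_if_true * prior
    # Total probability of seeing the evidence at all.
    evidence = numerator + p_evidence_if_false * (1.0 - prior)
    return numerator / evidence


# Hypothetical numbers, chosen only for illustration.
prior_strong = 0.20        # belief that the opponent has a strong hand
p_raise_if_strong = 0.90   # how often they raise with a strong hand
p_raise_if_weak = 0.30     # how often they raise (bluff) with a weak hand

posterior = bayes_update(prior_strong, p_raise_if_strong, p_raise_if_weak)
print(f"Belief in a strong hand after the raise: {posterior:.3f}")
```

With these made-up inputs the belief in a strong hand rises from 20% to about 43%: the raise is evidence, but not proof, because weak hands raise sometimes too. Feeding the posterior back in as the next prior is how the estimate would be refined as more actions are observed.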

The book is structured around a series of interviews with workers in the field, and partly as a result of this it reads rather nicely: it’s not just a dry exposition of facts, but has some human elements too. Though it’s no replacement for a technical reference, it’s worth reading as a survey of prediction, as well as for the human interest stories.

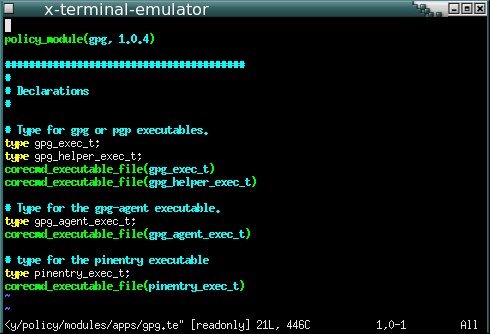
