This review was first published at ScraperWiki.

Following my revelations regarding sharing code with other people I thought I’d read more about the craft of writing code in the form of Clean Code: A Handbook of Agile Software Craftmanship by Robert C. Martin.

Despite the appearance of the word Agile in the title this isn’t a book explicitly about a particular methodology or technology. It is about the craft of programming, perhaps encapsulated best by the aphorism that a scout always leaves a campsite tidier than he found it. A good programmer should leave any code they touch in a better state than they found it. Martin has firm ideas on what “better” means.

After a somewhat sergeant-majorly introduction in which Martin tells us how hard this is all going to be, he heads off into his theme.

Martin doesn’t like comments, he doesn’t like switch statements, he doesn’t like flag arguments, he doesn’t like multiple arguments to functions, he doesn’t like long functions, he doesn’t like long classes, he doesn’t like Hungarian* notation, he doesn’t like output arguments…

This list of dislikes generally isn’t unreasonable; for example comments in code are in some ways an anachronism from when we didn’t use source control and were perhaps limited in the length of our function names. The compiler doesn’t care about the comments and does nothing to police them so comments can be actively misleading (Guilty, m’lud). Martin prefers the use of descriptive function and variable names with a clear hierarchical structure to the use of comments.

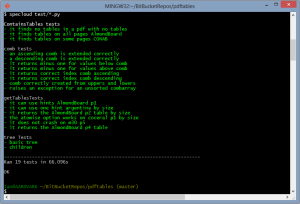

The Agile origins of the book are seen with the strong emphasis on testing, and Test Driven Development. As a new convert to testing I learnt a couple of things here: clearly written tests being as important as clearly written code, the importance of test coverage (how much of you code is exercised by tests).

I liked the idea of structuring functions in a code file hierarchically and trying to ensure that each function operates at a single layer of abstraction, I’m fairly sold on the idea that a function should do one thing, and one thing only. Although to my mind the difficulty is in the definition of “thing”.

It seems odd to use Java as the central, indeed only, programming language in this book. I find it endlessly cluttered by keywords used in the specification of functions and variables, so that any clarity in the structure and naming that the programmer introduces is hidden in the fog. The book also goes into excruciating detail on specific aspects of Java in a couple of chapters. As a testament to the force of the PEP8 coding standard, used for Python, I now find Java’s prevailing use of CamelCase visually disturbing!

There are a number of lengthy examples in the book, demonstrating code before and after cleaning with a detailed description of the rationale for each small change. I must admit I felt a little sleight of hand was involved here, Martin takes chunks of what he considers messy code typically involving longish functions and breaks them down into smaller functions, we are then typically presented with the highest level function with its neat list of function calls. The tripling of the size of the code in function declaration boilerplate is then elided.

The book finishes with a chapter on “[Code] Smells and Heuristics” which summarises the various “code smells” (as introduced by Martin Fowler in his book Refactoring: Improving the Design of Existing Code) and other indicators that your code needs a cleaning. This is the handy quick reference to the lessons to be learned from the book.

Despite some qualms about the style, and the fanaticism of it all I did find this an enjoyable read and felt I’d learnt something. Fundamentally I like the idea of craftsmanship in coding, and it fits with code sharing.

*Hungarian notation is the habit of appending letter or letters to variables to indicate their type.