I thought I might make a short post about opinion polls, since there’s a lot of them about at the moment, but also because they provide an opportunity to explain experimental errors – of interest to most scientists.

I can’t claim great expertise in this area: physicists tend not to do a great deal of statistics, unless you count statistical mechanics, which is a different kettle of fish to opinion polling. Really you need a biologist or a consumer studies person. Physicists are, however, all very familiar with experimental error, in a statistical sense rather than the “oh bollocks I just plugged my 110 volt device into a 240 volt power supply” or “I’ve dropped the delicate critical component of my experiment onto the unyielding floor of my lab” sense.

There are two sorts of error in the statistical sense: “random error” and “systematic error”. Let’s imagine I’m measuring the height of a group of people. To make my measurement easier I’ve made them all stand in a small trench, whose depth I believe I know. I take measurements of the height of each person as best I can, but some of them have poor posture and some of them have bouffant hair, so getting a true measure of their height is a bit difficult: if I were to measure the same person ten times I’d come out with ten slightly different answers. This bit is the random error.

To find out everybody’s true height I also need to add the depth of the trench to each measurement. I may have made an error here though: perhaps a boiled sweet was stuck to the end of my ruler when I measured the depth of the trench. In this case my mistake is added to all of my other results, and is called a systematic error.

This leads to a technical usage of the words “precision” and “accuracy”. Reducing random error leads to better precision, reducing systematic error leads to better accuracy.
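The trench example is easy to play with numerically. What follows is only a rough sketch: the true height, the 1 cm scatter from posture and hair, and the 0.5 cm boiled-sweet error are all numbers I have made up, and I have assumed the random error is Gaussian.

```python
import random
import statistics


def measure_heights(true_height_cm, n, posture_sd_cm, trench_error_cm, seed=0):
    """Simulate n repeated measurements of one person standing in the trench.

    posture_sd_cm   -- spread of the random error (assumed Gaussian)
    trench_error_cm -- fixed mistake in the trench depth, added to every reading
    """
    rng = random.Random(seed)
    return [
        true_height_cm + trench_error_cm + rng.gauss(0.0, posture_sd_cm)
        for _ in range(n)
    ]


# All values below are invented for illustration.
true_height = 175.0
readings = measure_heights(
    true_height, n=10_000, posture_sd_cm=1.0, trench_error_cm=0.5
)

spread = statistics.stdev(readings)             # random error: limits precision
bias = statistics.mean(readings) - true_height  # systematic error: limits accuracy

print(f"spread of readings: {spread:.2f} cm")
print(f"offset of the average from the truth: {bias:.2f} cm")
```

Averaging lots of readings beats down the scatter, but the average still sits about half a centimetre away from the truth: no amount of repetition removes the boiled sweet.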

This relates to opinion polling: I want to know the result of the election in advance. One way to do this would be to get everyone who was going to vote to tell me in advance what their voting intentions are. This would be fairly accurate, but utterly impractical. So I must resort to “sampling”: asking a subset of the total voting population how they are going to vote and then, by a cunning system of extrapolation, working out how everybody’s going to vote. The size of the electorate is about 45 million; the size of a typical sampling poll is around 1000. That’s to say one person in a poll represents 45,000 people in a real election.

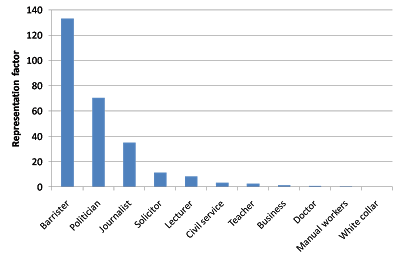

To get this to work you need to know about the “demographics” of your sample and the group you’re trying to measure. Demographics is stuff like age, sex, occupation, newspaper readership and so forth: all things that might influence the voting intentions of a group. Ideally you want the demographics of your sample to be the same as the demographics of the whole voting population; if they’re not the same you will apply “weightings” to the results of your poll to adjust for the different demographics. You will, of course, try to get the right demographics in the sample, but people may not answer the phone or you might struggle to find the right sort of person in the short time you have available. The problem is you don’t know for certain what demographic variables are important in determining the voting intentions of a person. This is a source of systematic error, and some embarrassment for pollsters.
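To make the idea of weighting concrete, here is a minimal sketch using a single demographic variable. The groups, the counts and the population fractions are entirely invented, and real pollsters weight on several variables at once using their own schemes, so treat this as an illustration of the principle rather than anybody’s actual method.

```python
def weighted_share(sample_counts, supporters, population_share):
    """Estimate a party's share by re-weighting each group to its population size.

    sample_counts    -- people interviewed in each demographic group
    supporters       -- how many of them back the party
    population_share -- fraction of the real electorate in each group
    """
    total = 0.0
    for group, pop_fraction in population_share.items():
        support_in_group = supporters[group] / sample_counts[group]
        total += pop_fraction * support_in_group
    return total


# Hypothetical poll of 1000 people that over-samples the over-50s.
sample_counts = {"under_50": 400, "over_50": 600}
supporters = {"under_50": 120, "over_50": 300}
population_share = {"under_50": 0.5, "over_50": 0.5}

raw = sum(supporters.values()) / sum(sample_counts.values())
weighted = weighted_share(sample_counts, supporters, population_share)

print(f"raw share:      {raw:.1%}")
print(f"weighted share: {weighted:.1%}")
```

If age were the wrong variable to weight on, or the population fractions were out of date, the weighted answer would be shifted in the same direction every time, which is exactly what a systematic error looks like.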

Although the voting intentions of the whole population may be very definite (and even that’s not likely to be the case), my sampling of that population is subject to random error. You can improve your random error by increasing the number of people you sample, but the statistics are against you because the improvement in error goes as one over the square root of the sample size. That’s to say a sample which is 100 times bigger only gives you 10 times better precision. The systematic error arises from the weightings; problems with systematic errors are difficult to track down in polling, as in science.
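The square-root rule can be checked with the usual textbook formula for the 95% margin of error of a proportion, 1.96 × √(p(1 − p)/n). This assumes a simple random sample with no weighting, which real polls are not, so the numbers are a lower bound on the true uncertainty rather than what any pollster would quote.

```python
import math


def margin_of_error(n, p=0.5, z=1.96):
    """95% margin of error for a proportion p from a simple random sample of size n.

    p=0.5 is the worst case; z=1.96 corresponds to 95% of a normal distribution.
    """
    return z * math.sqrt(p * (1.0 - p) / n)


small = margin_of_error(1_000)
large = margin_of_error(100_000)

print(f"n = 1,000:   +/- {small:.2%}")
print(f"n = 100,000: +/- {large:.2%}")
print(f"improvement: {small / large:.1f} times")
```

A poll of 1000 people comes out at roughly plus or minus 3 percentage points, and making the sample 100 times bigger only shrinks that by a factor of 10.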

So after this lengthy preamble I come to the decoration in my post, a graph. This is a representation of a recent opinion poll result shown in the form of probability density distributions: the area under each curve (or part of each curve) indicates the probability that the voting intention lies in that range. The data shown is from the YouGov poll published on 27th April. The full report on the poll is here; you can find the weighting they applied on the back page of the report. The “margin of error” of which you very occasionally hear talk gives you a measure of the width of these distributions (I assumed 3% in this case, since I couldn’t find it in the report); the horizontal location of the middle of each peak tells you the most likely result for that party.

For the Conservatives I have indicated the position of the margin of error: the polling organisation believes that the result lies in the range indicated by the double-headed arrow with 95% probability. However, there is a 5% chance (1 in 20) that it lies outside this range. This poll shows that the Labour and Liberal Democrat votes are effectively too close to call, and the overlap with the Conservative peak indicates some chance that they do not truly lead the other two parties. And this is without considering any systematic error. For an example of systematic error causing problems for pollsters see this Wikipedia article on The Shy Tory Factor.
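The overlap between two peaks can be turned into a rough probability that one party really is ahead of another. The sketch below treats each party’s share as an independent normal distribution whose 95% range is the 3% margin of error I assumed for the graph. The two shares plugged in at the end are placeholders I have invented, not the figures from the YouGov poll, and the independence assumption is a simplification.

```python
import math


def normal_cdf(x):
    """Cumulative probability of a standard normal distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_a_leads_b(share_a, share_b, margin=0.03, z=1.96):
    """Probability that A's true share exceeds B's.

    Assumes both shares are independent normals with the same margin of error.
    """
    sd = margin / z                 # standard deviation of one party's share
    sd_diff = math.sqrt(2.0) * sd   # standard deviation of the difference
    return normal_cdf((share_a - share_b) / sd_diff)


# Fraction of a normal distribution lying within the margin of error.
inside = normal_cdf(1.96) - normal_cdf(-1.96)

# Hypothetical shares, for illustration only.
lead = prob_a_leads_b(0.33, 0.29)

print(f"probability inside the margin of error: {inside:.3f}")
print(f"probability that the 33% party truly leads the 29% party: {lead:.3f}")
```

Two parties on identical shares come out at exactly 50%, which is the “too close to call” case.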

Actually for these data it isn’t quite as simple as I have presented since a reduction in the percentage polled of one party must appear as an increase in the percentages polled of other parties.
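This coupling between the parties shows up if you simulate lots of polls drawn from the same electorate. In the sketch below the “true” shares are invented, each simulated poll asks 1000 people, and I have ignored weighting and don’t-knows altogether.

```python
import random


def simulate_polls(true_shares, sample_size=1000, n_polls=2000, seed=1):
    """Draw n_polls simple random samples and return the share of each party."""
    rng = random.Random(seed)
    parties = list(true_shares)
    weights = [true_shares[p] for p in parties]
    results = []
    for _ in range(n_polls):
        votes = rng.choices(parties, weights=weights, k=sample_size)
        results.append({p: votes.count(p) / sample_size for p in parties})
    return results


def correlation(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical true shares, for illustration only.
true_shares = {"A": 0.35, "B": 0.30, "C": 0.25, "Other": 0.10}
polls = simulate_polls(true_shares)

corr_ab = correlation([p["A"] for p in polls], [p["B"] for p in polls])
print(f"correlation between the polled shares of A and B: {corr_ab:.2f}")
```

The correlation comes out negative: a poll that happens to overstate party A tends to understate party B, because the shares in every poll must add up to 100%. The peaks in the graph are therefore not independent of one another.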

On top of all this, the first-past-the-post electoral system means that the overall result in terms of seats in parliament is not simply related to the percentage of votes cast.