Coders can be obsessive about their text editors, dividing into relatively good-natured camps. It is text editors, not development environments, over which they obsess, and the great schism is between the followers of vim and those of Emacs. The line between text editor and development environment can be a bit fuzzy. A development environment is designed to help you do all the things required to make working software (writing, testing, compiling, linking, debugging, organising projects and libraries), whilst a text editor is designed to edit text. But sometimes text editors suffer from mission creep.

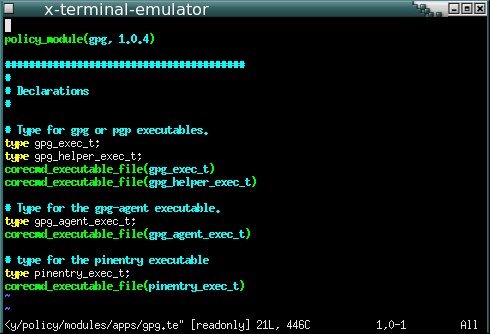

vim and emacs are both editors with a long pedigree on Unix systems. vim’s parent, vi, came into being in 1976; vim itself, whose name stands for “Vi Improved”, was born in 1991. Emacs was also born in 1976. Glancing at the emacs Wikipedia page, I see there are elements of religiosity in the conflict between them.

To users of OS X and Windows, vim and emacs look and feel, frankly, bizarre. They came into being when windowed GUIs didn’t exist. In basic mode they offer a large blank screen with no icons or even text menu items. There is a status line and a command line at the bottom of the screen. Users interact by issuing keyboard commands; they are interfaces made up entirely of keyboard shortcuts. It’s said that the best way to generate a random string of characters is to sit a class of naive computer science undergraduates down in front of vim and tell them to save the file and exit the program! In fact, to demonstrate the point, I’ve just trapped myself in emacs whilst trying to take a screenshot.

vim and emacs are both incredibly extensible; they’re written by coders for coders. As a measure of their flexibility, you can get Twitter clients which run inside them.

I’ve used both emacs and vim but not warmed to either of them. I find them ugly to look at and confusing, and I don’t sit in front of an editor for enough of the day to make remembering keyboard shortcuts a comfortable experience. I’ve used the Matlab, Visual Studio and Spyder IDEs but never felt impassioned enough to write a blog post about them. I had a bad experience with Eclipse, which led to one of my more valued Stack Overflow answers.

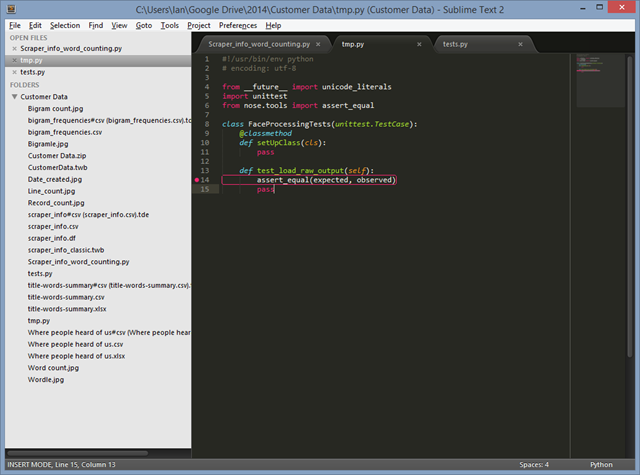

But now I’ve discovered Sublime Text.

Sublime Text is very beautiful, particularly beside vim and emacs. I like the little inset in the top right of my screen which shows the file I’m working on from an eagle’s perspective, and the nice rounded tabs. The colour scheme is subtle and muted, and I can get a panoply of variants on the theme. At Unilever we used to talk about trying to delight consumers with our products – Sublime Text does this. My only wish is that it went the way of Google Chrome and got rid of the Windows bar at the top.

Not only this: as with emacs and vim, I can customise Sublime Text with code, or use packages other people have written, and all in my favoured language, Python.
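To give a flavour of what customising the editor in Python looks like, here is a minimal sketch of a plugin. It is not taken from any real package: the names `shout` and `ShoutSelectionCommand` are made up for illustration. `sublime_plugin.TextCommand` and the `view` methods come from Sublime Text's plugin API, which is only importable inside the editor, hence the guarded import.

```python
def shout(text):
    """Pure helper (hypothetical): return the text in upper case."""
    return text.upper()


try:
    # Only available when the file is loaded by Sublime Text itself.
    import sublime_plugin
except ImportError:
    sublime_plugin = None


if sublime_plugin is not None:

    class ShoutSelectionCommand(sublime_plugin.TextCommand):
        """Upper-case every non-empty selection in the current view."""

        def run(self, edit):
            for region in self.view.sel():
                if not region.empty():
                    original = self.view.substr(region)
                    self.view.replace(edit, region, shout(original))
```

Saved as a `.py` file in the `Packages/User` folder, a command like this should be runnable from the Sublime Text console with `view.run_command("shout_selection")`; the editor derives the command name from the class name.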

I use Sublime Text mainly to code in Python, using a Git Bash prompt to run code and to check it into source control. At the moment I have the following packages installed:

- Package Control – for some reason the thing that makes it easy to add new packages to Sublime Text comes as a separate package which you need to install manually;

- PEP8 Autoformat – languages have style guides: soft guidelines to ensure consistent use of whitespace, capitalisation and so forth. Some people get very uptight about style. PEP8 is the Python style guide, and PEP8 Autoformat allows you to effortlessly conform to the style guide and so avoid friction with your colleagues;

- Cheat Sheets – I can’t remember how to do anything; cheat sheets built into the editor make it easy to find things, and you can add your own cheat sheets too;

- Markdown Preview – Markdown is a way of writing HTML without all the pointy brackets; this package helps you view the output of your Markdown;

- SublimeRope – a handy package that tells you when your code won’t run and helps with autocompletion. Much better than cryptic error messages when you try to run faulty code. I suspect this is the most useful one so far.

- Git and GitGutter – integrating Git source control into the editor. Git provides all the Git commands on a menu whilst GitGutter adds markers in the margin (or gutter) showing the revision status. These work nicely on Ubuntu but I haven’t worked out how to configure them on Windows.

- SublimeREPL – brings a Python prompt into the editor. There are some configuration subtleties here when working with virtual environments.
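To make the PEP8 item in the list above a little more concrete, here is a small sketch of the kind of thing the style guide asks for. The function `mean_of` is invented purely for illustration. The commented-out version runs but ignores the guide; the version below it follows it. A formatter will typically tidy the layout (spacing, indentation, line breaks), whereas choosing PEP8-style names is still up to you.

```python
# Before: legal Python, but not PEP8 style.
#
# def MeanOf( Values ):
#   Total=0
#   for V in Values : Total+=V
#   return Total/len( Values )


# After: lower_case names, four-space indents, spaces around operators,
# one statement per line.
def mean_of(values):
    """Return the arithmetic mean of a non-empty sequence of numbers."""
    total = 0
    for value in values:
        total += value
    return total / len(values)
```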

I know I’ve only scratched the surface of Sublime Text but, unlike with other editors, I want to learn more!

![GNU emacs-[1]](https://ianhopkinson.org.uk/wp-content/uploads/2014/02/GNU-emacs-1.png)